“Echoing” is a fully self-contained tracking and analysis system. It uses a camera to detect and track people in a room, a directional loudspeaker to target selected individuals with sound, and AI-based analysis to generate speculative narratives about them.

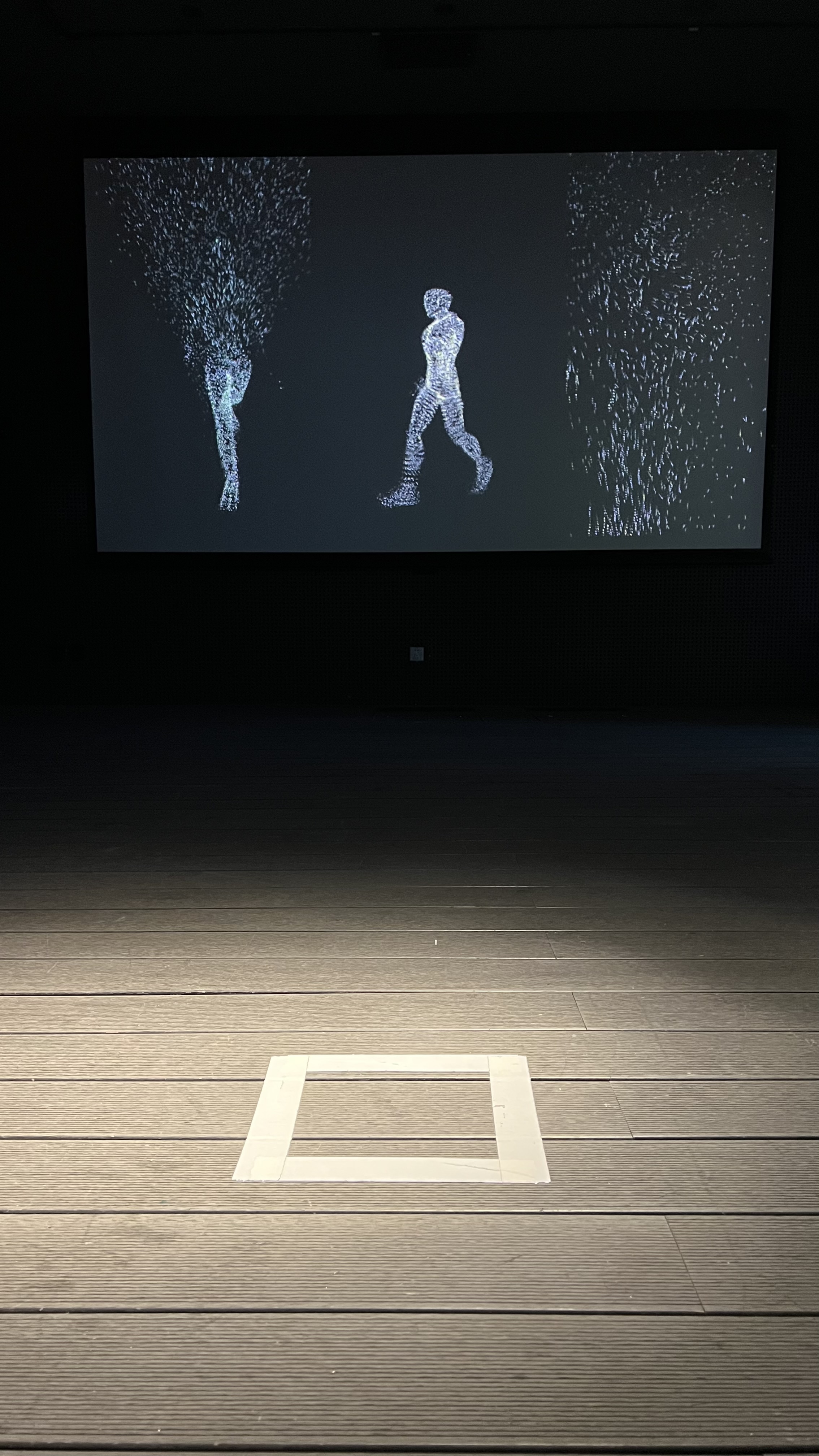

As visitors enter the exhibition space, a soundscape plays. The directional speaker scans the room, tagging individuals with sound in a game-like manner. One person is randomly selected, visually scanned, and their image appears on a monitor along with descriptive details. A language model then generates and speaks a narrative based solely on appearance and perceived traits.

These spoken texts speculate on identity, values, income, habits, and environmental responsibility, producing a form of algorithmic judgment that feels personal yet anonymous. Afterward, the system moves on to another individual.

The work draws direct connections to U.S. military "signature strikes," in which individuals are targeted not on the basis of a known identity but on behavioral patterns and visual data. "Echoing" makes such mechanisms of automated observation, inference, and judgment tangible within a shared public space.